Aether is a minimalist PaaS (Platform as a Service) inspired by Vercel and Netlify. You deploy an npm project by providing the URL of its public Git repository.

Features ✨

- One-click deployments from Git repositories 🖱️

- Automatic build and deployment pipeline 🔄

- Real-time log streaming for easy debugging 📊

- Scalable infrastructure that grows with your needs 📈

- Comprehensive monitoring and analytics 🔍

- Zero configuration required for most projects ⚙️

Tech Stack 🛠️

- Frontend: Next.js 14, TypeScript, NextUI, Clerk (auth), Tailwind CSS, Zustand, React Query, Framer Motion

- Backend: Node.js, Go, Fastify, Chi

- Database: PostgreSQL

- ORM: Drizzle

- Message Queue: AWS SQS

- Streaming: AWS Kinesis

- Storage: AWS S3

- Container Orchestration: Kubernetes (AWS EKS)

- CI/CD: GitHub Actions, ArgoCD

- Infrastructure as Code: Terraform

- Cloud Provider: AWS

- Inter-service Communication: gRPC, Protocol Buffers

- Auto-scaling: Horizontal Pod Autoscaler (HPA), KEDA

- Monitoring: Prometheus, Grafana

Microservices 🌐

- Frontstage: Public-facing Next.js app for the user interface

- Launchpad: Fastify app for project CRUD operations; communicates with other services over gRPC

- Forge: Go app for building and deploying projects; communicates with other services over gRPC

- Logify: Go app for log streaming and aggregation; communicates with other services over gRPC

- Proxy: Go app serving as a reverse proxy for deployed projects
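As an illustration of the Proxy role, the sketch below shows one way a reverse proxy could decide which deployed project a request belongs to. It assumes each project is served from its own subdomain of a platform domain, which this README does not state; the function name `ProjectFromHost` and the domain `aether.example` are hypothetical and not taken from Aether's code.

```go
package main

import (
	"errors"
	"net"
	"strings"
)

// ErrUnknownHost is returned when the host is not a single-label
// subdomain of the platform's base domain.
var ErrUnknownHost = errors.New("host is not a project subdomain")

// ProjectFromHost extracts a project slug from a request host such as
// "my-app.aether.example:8080", given the base domain "aether.example".
// The subdomain-per-project scheme is an assumption made for illustration.
func ProjectFromHost(host, baseDomain string) (string, error) {
	// Strip the port if one is present; hosts without a port are left as-is.
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h
	}
	host = strings.ToLower(strings.TrimSuffix(host, "."))

	suffix := "." + strings.ToLower(baseDomain)
	if !strings.HasSuffix(host, suffix) {
		return "", ErrUnknownHost
	}

	// Reject the bare domain and nested subdomains such as "a.b.aether.example".
	slug := strings.TrimSuffix(host, suffix)
	if slug == "" || strings.Contains(slug, ".") {
		return "", ErrUnknownHost
	}
	return slug, nil
}
```

A real proxy would then look up where the project's build output lives and forward the request there, for example with `httputil.ReverseProxy` from the Go standard library.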

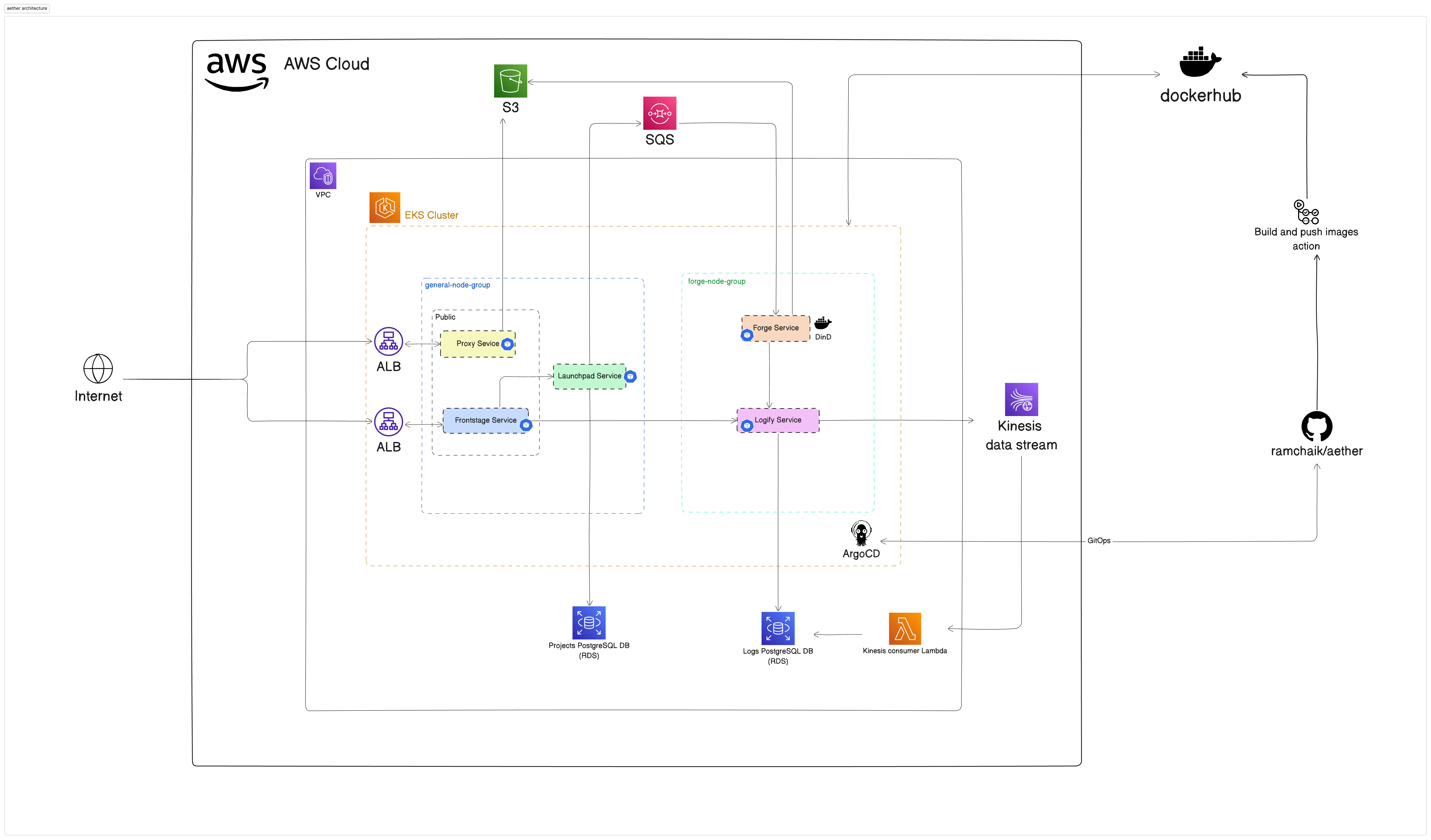

Architecture

Aether uses GitHub Actions for CI/CD: workflows build Docker images and push them to Docker Hub. ArgoCD handles GitOps, keeping the EKS cluster in sync with the GitHub repository.

All infrastructure is provisioned using Terraform, with Kubernetes manifests for deploying to AWS EKS.

Auto-scaling ⚖️

Aether scales its services automatically, using two mechanisms to balance resource usage and responsiveness:

- Horizontal Pod Autoscaler (HPA): Most pods use CPU metrics for scaling, ensuring efficient resource usage across the cluster.

- KEDA (Kubernetes Event-driven Autoscaling): The Forge service uses KEDA with an AWS SQS trigger, so it scales with the number of messages waiting in the queue.
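The arithmetic behind both scalers can be sketched in a few lines of Go. This is a simplified model, not Aether's configuration: the first function follows the replica formula documented for the Horizontal Pod Autoscaler but omits its tolerance band and stabilization window, and the second approximates queue-based scaling as "one replica per N queued messages". All function names, parameters, and numbers here are illustrative assumptions.

```go
package main

import "math"

// HPADesiredReplicas applies the core HPA rule:
//   desired = ceil(currentReplicas * currentMetric / targetMetric)
// Tolerance and stabilization behaviour are intentionally left out.
func HPADesiredReplicas(currentReplicas int, currentCPU, targetCPU float64) int {
	if targetCPU <= 0 {
		return currentReplicas
	}
	return int(math.Ceil(float64(currentReplicas) * currentCPU / targetCPU))
}

// QueueDesiredReplicas models queue-driven scaling: one replica per
// targetPerReplica messages, clamped to [minReplicas, maxReplicas].
func QueueDesiredReplicas(queueLength, targetPerReplica, minReplicas, maxReplicas int) int {
	if targetPerReplica <= 0 || queueLength < 0 {
		return minReplicas
	}
	// Integer ceiling division.
	n := (queueLength + targetPerReplica - 1) / targetPerReplica
	if n < minReplicas {
		n = minReplicas
	}
	if n > maxReplicas {
		n = maxReplicas
	}
	return n
}
```

For example, with a hypothetical target of 5 messages per replica and a cap of 10 replicas, a queue of 12 messages yields 3 Forge replicas, and an empty queue with a minimum of 0 scales the service down to zero.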